Software Engineering involves the design, coding, testing, and debugging of electronic computer software.

This page is intended to provide some history of computer processor architectures used as spacecraft guidance systems and others that are more practical for the hobbyist or student to work with. For those processors that are more practical for hobbyists and students of robotics, the page provides pointers to software that can help with the engineering of micro controller software. Any software referenced here is either free, inexpensive, or demo software so it can be tried without initially investing significant money. Where possible we've included a link to the site where you can get the latest version rather than a direct download of a possibly stale version. All CPU software development tools mentioned here will be for 16-bit or 8-bit micro controllers since there are plenty of Linux, Unix, VxWorks, and Windows based tools for most 32-bit and 64-bit CPUs. Also, 32-bit and 64-bit CPUs typically consume more power than 16-bit and 8-bit micro controllers and thus can't last as long without being recharged.

The most fundamental development tool you will need to develop software for a micro controller is an assembler. For the beginner, an assembler takes a text source file of processor architecture specific assembly source code and converts it into bytes of that processor architecture's machine code. This machine code is typically written to a *.bin file (binary file format), *.hex file (hexadecimal file format), or sometimes a *.s19 file that can be uploaded to the controller or burned into its PROM. The file you can load to a particular microcontroller will depend on the type of memory (PROM, EPROM, EEPROM, flash, etc.) the microcontroller chip or board uses and on the download interface software or PROM programming software you are using.
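As a rough illustration of what an assembler's output file can look like, the sketch below wraps a few machine code bytes in the widely used Intel HEX record layout. It assumes the *.hex file mentioned above is Intel HEX, which may not be true for every tool. The function names are hypothetical, and the three sample bytes are 8080 opcodes (MVI A,1 followed by HLT) chosen only as an example.

```python
def intel_hex_record(address, data, record_type=0x00):
    """Build one Intel HEX record: ':' + count + address + type + data + checksum."""
    body = bytes([len(data), (address >> 8) & 0xFF, address & 0xFF, record_type]) + bytes(data)
    # The checksum is the two's complement of the low byte of the sum of all record bytes.
    checksum = (-sum(body)) & 0xFF
    return ":" + (body + bytes([checksum])).hex().upper()


def to_intel_hex(start_address, machine_code, bytes_per_record=16):
    """Split machine code into data records and finish with an end-of-file record."""
    lines = []
    for offset in range(0, len(machine_code), bytes_per_record):
        chunk = machine_code[offset:offset + bytes_per_record]
        lines.append(intel_hex_record(start_address + offset, chunk))
    lines.append(intel_hex_record(0, b"", record_type=0x01))  # end-of-file record
    return lines


# Hypothetical program: 8080 opcodes for MVI A,1 then HLT, loaded at address 0000h.
for line in to_intel_hex(0x0000, bytes([0x3E, 0x01, 0x76])):
    print(line)
```

A *.bin file would instead hold just the three raw bytes, with no addresses or checksums, which is why the loader or programmer software determines which format is usable.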

A higher-level programming language compiler can be used to generate the assembly source code or some will create a machine code binary file directly.

While it's easier to write source code in a high-level language than in assembly source code, it might be necessary to have more memory in your controller to load an interpreter or runtime or even more to support garbage collection. Along with the language you use, you might also leverage a library or framework of precoded procedures, functions, or methods to save the time of coding everything from scratch. Additionally, you might want to use a basic I/O subsystem (BIOS), executive, or real-time operating system (RTOS), but again these take even more resources on your controller.

Other helpful tools include applications that allow you to test and debug the execution of the machine code you've created. If these tools are designed to work with the controller board, they can be used to upload the binary to the actual controller. Debuggers also exist that allow you to test the code without the hardware. They do this by simulating on your desktop computer most of the behavior of the controller. While a simulating debugger might be easier to configure, you won't know the code really works the way you want it to until you upload it to the hardware. These tools are all specific to the controller or processor architecture family that you're developing for. The links below will help you find what you're looking for.

While assemblers, compilers, and debuggers are all specific to the controller or processor architecture family that you're developing for, software written in higher-level programming languages does not need to be controller or processor specific. So if you have software for an algorithm written in C, and you have a C compiler for a Z80 processor and a C compiler for an AVR processor, you could compile the same software for either processor by using the appropriate compiler. Some common software modules that you might need to code for are listed below.

A real time operating system or executive is different from early desktop or disk operating systems such as CP/M, ProDOS, or MS-DOS. The former allows multiple tasks or processes to execute at once and has a timer to interrupt processes and change what is executing. The latter focused on allowing users to store and retrieve files from permanent storage, but typically only allowed one process to execute at a time. A real time operating system or executive has defined time constraints. Processing must be done within the time constraints or sensor data might be lost and control actions might not occur at the correct time. Thus, most common older operating systems for small systems are not applicable for control systems. Linux, Unix, and Windows 32-bit and 64-bit operating systems are designed for multitasking and have timers to interrupt processes to enable this. This makes them capable of having real time variants that can be used in control systems.

Real time operating systems are used to enable one processor to handle multiple tasks or processes concurrently. Such tasks might involve flight control, navigation sensor readings, communications, telemetry sensor readings, and even power management. While these tasks might need action taken at almost the same time, they typically don't take very much computing time to handle. Thus by switching between the tasks they can all be handled by one processor. This is what was done in systems like the Apollo Guidance Computer (AGC). This was more important when processors were larger and heavier. However, an alternative sometimes now employed is to have very small processors dedicated to each task. These dedicated processors then communicate through a communications link to other processors or a master processor like the flight controller. While processors are smaller and lighter than in the past, they still consume power, and the more hardware complexity there is, the more chance there is for something to fail.
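The idea of one processor switching between several short tasks can be sketched with a tiny round-robin scheduler. This is only an illustration: it is cooperative (each task voluntarily yields) rather than pre-empted by a timer interrupt as a real executive would be, it has no deadlines or priorities, and the task names are made up for the example.

```python
from collections import deque


def task(name, steps, log):
    """A hypothetical task that does a little work, then yields the processor."""
    for step in range(steps):
        log.append(f"{name}:{step}")
        yield  # hand control back to the scheduler


def run_round_robin(tasks):
    """Run each ready task for one step in turn until all tasks have finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # let the task run until its next yield
        except StopIteration:
            continue               # task finished, drop it from the queue
        ready.append(current)      # otherwise put it at the back of the line


log = []
run_round_robin([task("nav", 2, log), task("telemetry", 3, log), task("power", 1, log)])
print(log)
# ['nav:0', 'telemetry:0', 'power:0', 'nav:1', 'telemetry:1', 'telemetry:2']
```

A pre-emptive executive replaces the voluntary `yield` with a timer interrupt, so a task that runs too long cannot starve the others.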

Navigation, guidance, and control software or flight control software aims to bring a spacecraft to a specific goal or to complete a specific task. Programming such software is typically done by modeling the location of mechanical items or the direction of force being applied within a coordinate system. This requires a lot of math calculations to be performed. However, the most basic processor architectures only do integer math, and sometimes only integer addition and subtraction with no multiplication or division. In order to support math with real numbers, fixed point or floating point math can be done. In fixed point math, integers are used to represent real numbers, but they're assumed to represent fractional units so the integer value 1 might represent 1/256th. In floating point math, a structure of two integers is used to represent real numbers, where one represents a mantissa and one the exponent. More modern 32-bit and 64-bit processors have special floating point instructions and hardware designed to handle the calculations without software. This is a long way from the need to support square, square root, vectors, and trigonometric functions like sine, cosine, and tangent in order to calculate positions of things in a coordinate system. In fact, the Apollo Guidance Computer (AGC) and other lower power systems didn't use floating point for real numbers, but did have software to support vectors and trigonometric functions. The AGC did this by using fixed point and assuming integers were fractions of a unit circle.
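The fixed point idea, where the integer value 1 stands for 1/256th, can be sketched as follows. This is a minimal illustration with 8 fractional bits; the helper names are hypothetical and it is not the number format of the AGC or any other specific machine.

```python
FRACTION_BITS = 8
SCALE = 1 << FRACTION_BITS  # 256, so the integer 1 represents 1/256


def to_fixed(value):
    """Convert a real number to its fixed point integer representation."""
    return int(round(value * SCALE))


def to_float(fixed):
    """Convert a fixed point integer back to a real number for display."""
    return fixed / SCALE


def fixed_add(a, b):
    """Addition needs no adjustment because both operands share the same scale."""
    return a + b


def fixed_mul(a, b):
    """The raw product is scaled by 256 * 256, so shift right once to rescale."""
    return (a * b) >> FRACTION_BITS


a = to_fixed(1.5)    # 384
b = to_fixed(2.25)   # 576
print(to_float(fixed_add(a, b)))  # 3.75
print(to_float(fixed_mul(a, b)))  # 3.375
```

Only integer add, multiply, and shift are used, which is why this approach suits processors without floating point hardware. The cost is a fixed resolution of 1/256 and a limited range.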

Software that uses sensors, possibly combined with maps, to determine a craft's location is navigation software. Simultaneous localization and mapping (SLAM) is the software problem of keeping track of a location in an environment at the same time as constructing a map of that environment. Kalman filters are quite often used in the algorithms used to solve SLAM. Even before SLAM became an acronym, Kalman filters were used in the Apollo Guidance Computer (AGC) software when navigating on the way to the Moon.
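To give a feel for what a Kalman filter does, here is a minimal one-dimensional sketch that estimates a single constant value from noisy readings. It is far simpler than a navigation or SLAM filter, which tracks a vector of states with matrices. The function name, the variance parameters, and the sample readings are all assumptions made for illustration.

```python
def kalman_1d(measurements, process_var, measurement_var, estimate=0.0, error_var=1.0):
    """Scalar Kalman filter for a value assumed to stay constant between readings."""
    estimates = []
    for z in measurements:
        # Predict: the value is assumed unchanged, but uncertainty grows a little.
        error_var += process_var
        # Update: blend prediction and measurement, weighted by their uncertainties.
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)
        error_var *= (1.0 - gain)
        estimates.append(estimate)
    return estimates


# Hypothetical noisy range readings around a true value of 10.
readings = [10.4, 9.7, 10.1, 9.9, 10.3, 9.8, 10.0, 10.2]
for value in kalman_1d(readings, process_var=0.01, measurement_var=1.0, estimate=readings[0]):
    print(round(value, 3))
```

The gain starts high while the filter is uncertain and settles lower as confidence builds, so later readings nudge the estimate rather than replace it.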

Guidance software takes information from navigation, and information about a desired goal location or outcome, and uses it to guide control software. So while navigation software can tell where a craft is in an environment, guidance software can tell a craft how to move or what action to take in order to get to a target destination or outcome. For example, Dijkstra's algorithm is an algorithm for finding the shortest paths between nodes in a graph, which might represent waypoints on possible paths through a terrain. Beyond Dijkstra's algorithm, the A* algorithm achieves better performance by using heuristics to guide its search for the shortest path.
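A compact sketch of Dijkstra's algorithm over a small waypoint graph is shown below. The waypoint names and travel costs are invented for the example, and the graph is stored as a plain dictionary of neighbor costs.

```python
import heapq


def dijkstra(graph, start):
    """Return the lowest total cost from start to every reachable node."""
    distances = {start: 0}
    queue = [(0, start)]  # priority queue of (cost so far, node)
    while queue:
        dist, node = heapq.heappop(queue)
        if dist > distances.get(node, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        for neighbor, cost in graph.get(node, {}).items():
            new_dist = dist + cost
            if new_dist < distances.get(neighbor, float("inf")):
                distances[neighbor] = new_dist
                heapq.heappush(queue, (new_dist, neighbor))
    return distances


# Hypothetical terrain: edge weights are travel costs between waypoints.
waypoints = {
    "lander": {"rock": 4, "ridge": 1},
    "ridge": {"rock": 2, "crater": 6},
    "rock": {"crater": 1},
    "crater": {},
}
print(dijkstra(waypoints, "lander"))
# {'lander': 0, 'rock': 3, 'ridge': 1, 'crater': 4}
```

A* follows the same structure but orders the queue by cost so far plus a heuristic estimate of the remaining distance, which lets it skip exploring waypoints that lead away from the goal.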

Some processor architectures used as controllers are described below. A common question is "what micro controller should I use...?" My advice would be to choose a controller that has a full set of development tools if your strength is electronics and a controller that has all the hardware you need built in if your strength is software. While architecturally the 8096 was one of my favorite processors, the lack of availability of chips and tools makes it impractical to use anymore. I would currently recommend either an AVR processor on an Arduino board or a Z80 processor on any number of boards or on a build-it-yourself board.

Some processor architectures are described as being RISC processors. RISC stands for Reduced Instruction Set Computer. The meaning of RISC is not that the processor architecture has a reduced set of instructions, but rather that the instructions themselves are simpler, typically having few if any addressing modes beyond loading memory addressed by a register, and all math and logic operations working only on registers. This leads to code patterns of loading registers from memory, doing operations on them, and storing them back to memory. Thus, RISC architecture processors are also referred to as load-store architecture processors. Processor architectures designed with complex addressing modes are described as being CISC processors. CISC stands for Complex Instruction Set Computer. A CISC processor will typically have a mix of addressing modes allowing for addressing memory with registers, constants, register + constant, with the value that a register points at, and will have options such as auto incrementing or auto decrementing of registers, all in combination with math and logic operations. The advantage of RISC processors is that without instructions with complex addressing modes, instructions can be pipelined with multiple instructions being executed each at a different stage of the processor at the same time. While RISC instructions might not have addressing modes that might be convenient to programmers or compiler developers, they allow instruction parallelism that allows processors to execute instructions faster. RISC processors also typically require fewer transistors to implement than similar CISC processors and thus can consume less power. On the other hand, CISC processors typically provide better code density allowing similar programs to take less memory.
Note that because of misunderstandings of the term RISC and because of processor manufacturers trying to market their processors as the more modern RISC architecture versus older CISC architecture, sometimes processors described as RISC aren't really RISC processors. There are other processor types beyond CISC and RISC, such as VLIW or Very Large Instruction Word, but these are not as common and none are represented here.

Processors are also described as 8-bit, 16-bit, 32-bit, and 64-bit. There's even a 15-bit processor described below that was used in the Apollo spacecraft and a 24-bit processor that was used in the Viking landers on Mars. This terminology can also be misunderstood because it can refer to different characteristics of a processor. It typically refers to the number of bits a processor handles at one time. However, with the variety of processor designs, many processors don't do everything they do with the same number of bits. A processor might do two 4-bit add operations internally in order to do an 8-bit add, but as far as the programmer is concerned it appears to be an 8-bit processor. Another processor might do two 16-bit add operations internally and externally in order to do a 32-bit add, but as far as the programmer is concerned it appears to be a 32-bit processor. Similarly a processor might also do two 8-bit add operations internally in order to calculate a 16-bit memory address, but since most other math and logic operations are 8-bit, as far as the programmer is concerned it appears to be an 8-bit processor.
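The way a wider add can be built from two narrower adds can be sketched like this: the low bytes are added first, and the carry out of that add is fed into the add of the high bytes. The function names are hypothetical and the sketch models no particular processor.

```python
def add8(a, b, carry_in=0):
    """Model an 8-bit adder: return the 8-bit result and the carry out."""
    total = a + b + carry_in
    return total & 0xFF, total >> 8


def add16_with_8bit_alu(x, y):
    """Add two 16-bit values using two passes through the 8-bit adder."""
    low, carry = add8(x & 0xFF, y & 0xFF)                         # low bytes first
    high, carry = add8((x >> 8) & 0xFF, (y >> 8) & 0xFF, carry)   # then high bytes plus carry
    return (high << 8) | low, carry


result, carry = add16_with_8bit_alu(0x12FF, 0x0001)
print(hex(result), carry)  # 0x1300 0
```

This mirrors the add followed by add-with-carry instruction pairs that programmers of 8-bit processors write by hand when the hardware does not do it for them.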

Other characteristics of processors describe their memory access or their register layout. Processors with a single memory space for code and data are described as von Neumann architecture. Processors with separate memory spaces for code and data and possibly multiple data are Harvard architectures. Processors where I/O operations don't have a separate address range are said to use memory mapped I/O. Some processors view memory as a single flat address space, while others have banks of memory or segments of memory. Memory banks and memory segments are sometimes used as ways of increasing a processor's memory address space, but can also be used to draw a distinction between code and data memory in von Neumann architecture processors. In desktop and server computers, flat memory is generally preferred, but in embedded systems it's preferable to distinguish between read only code and data, which can reside in read only memory (ROM), versus data that needs to be in read write memory. Also, some processors address their registers just like they're addressing a particular subset of data memory locations. Others have instruction encodings that specify registers differently than addressing data memory. Further, some processors are a mix that have very few registers, but have addressing modes that use subsets of data memory as additional registers that point to memory. There are examples of each of these below.

AGC (MIT Instrumentation Laboratory, Raytheon - 1966)

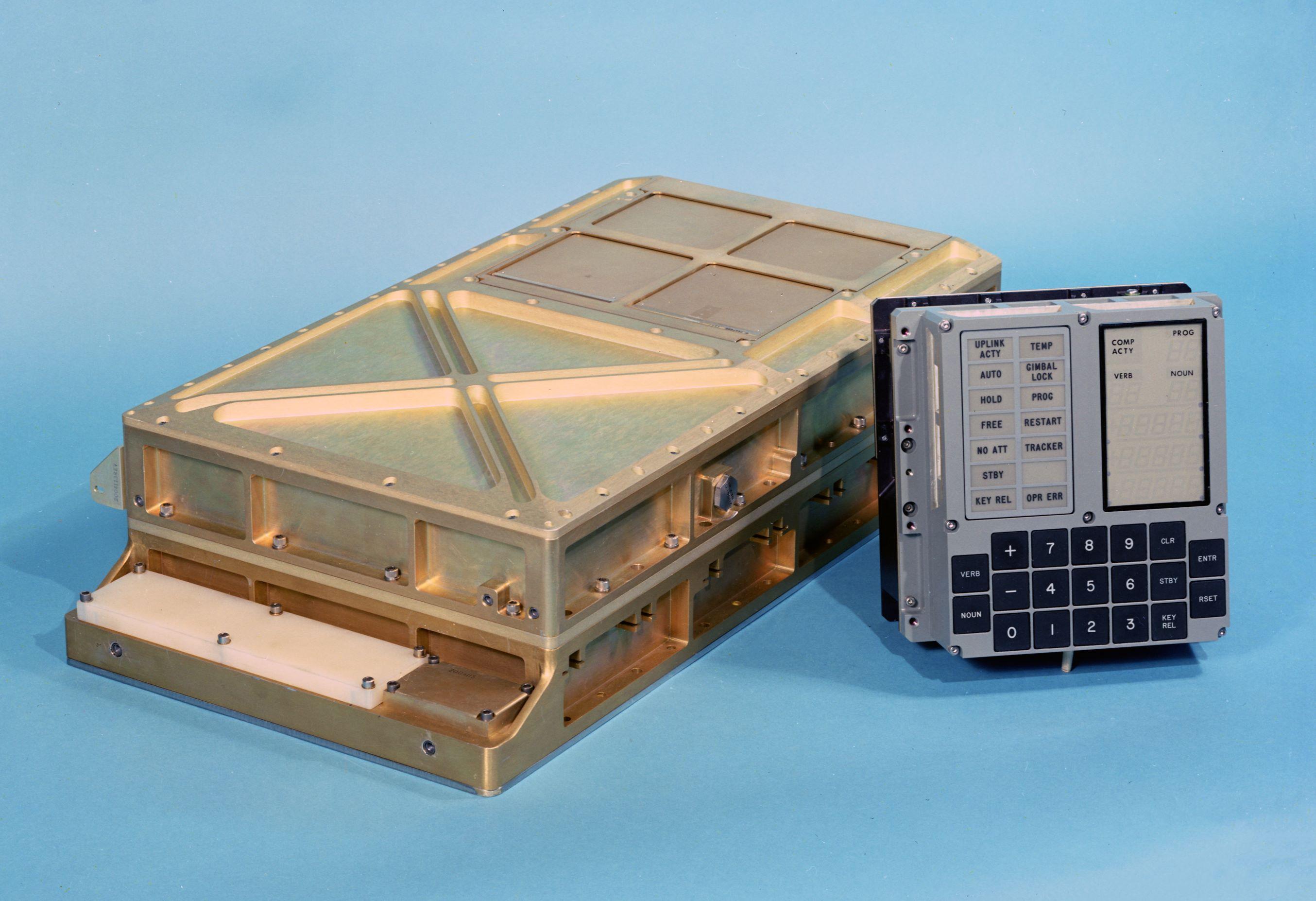

The great grand ancestor of them all. One of the first computers built from integrated circuits. In some ways, the first personal computer, since it had a calculator type keypad called the DSKY. It ran a simple real-time control program with pre-emptive scheduling called the Executive, which was cutting edge at the time compared to batch scheduling systems.

The AGC had instructions and data that were 15-bits rather than the multiple of 8-bits which is common today. Like the later 8080/Z80 and 8086/8088 series, I/O has its own address space. The accumulator is 15-bits, but some operations can be done on 30-bits. It addressed its registers like it was addressing low memory address locations. It included integer multiply and divide instructions in hardware, which many other early processors here don't include.

The AGC computers were:

Here is a Programmer's Manual for Block 2 AGC Assembly Language including processor architecture description.

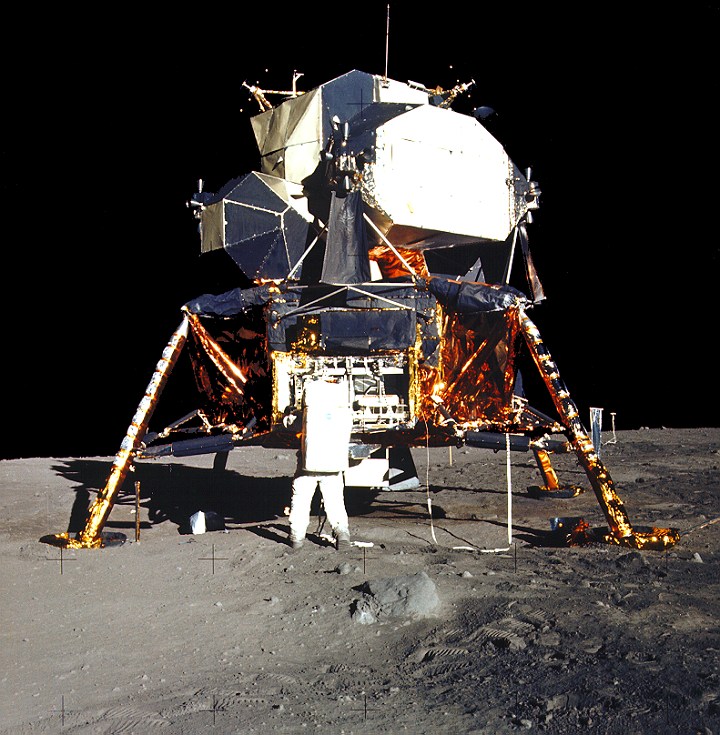

Apollo missions to the Moon (with the exception of Apollo 8, which did not have a Lunar Module) had two AGCs, one in the Command Module and another in the Lunar Module.

Apollo 11 was the first manned mission to land on the Moon.

The Apollo Guidance Computer: Architecture and Operation (Springer Praxis Books)

Digital Apollo: Human and Machine in Spaceflight (MIT Press)

HDC 402 (Honeywell - ~1971)

teraKUHN has not been able to locate definitive documentation from NASA, Martin Marietta (now Lockheed Martin), Honeywell, or JPL on the details of the HDC 402. However, we suspect that this was a variant of the DDP-124 computer's processor architecture designed by the Computer Control Company (aka 3C). The DDP-124 was the successor to the DDP-224, used for simulating AGCs for Apollo simulations, and the even earlier DDP-24. Honeywell had acquired 3C in 1966, well before Martin Marietta subcontracted the Viking computer to Honeywell in 1971. Other 24 bit systems Honeywell had access to would be variants of the Honeywell 300, GE 425, GE 435, GE/PAC 4020, or maybe 48 bit Honeywell 400, Honeywell 1400, Honeywell 800, or Honeywell 1800. GE's computer business was acquired by Honeywell in 1970. If you have any information that can confirm or disprove this suspicion, please email us at the teraKUHN project.

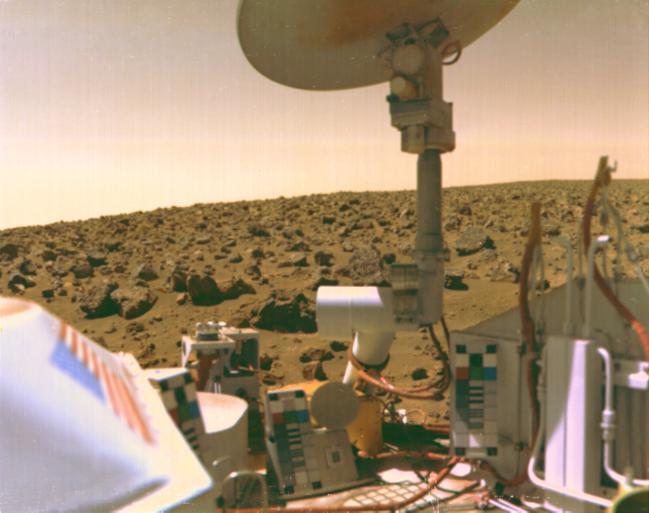

The Viking lander Guidance, Control, and Sequencing Computers (GCSC) were described as:

Here is a 3C DDP-124 brochure including processor architecture description. Its processor had a 24 bit word size, 48 instructions including the Halt instruction which would never be used on a spacecraft, and up to 3 index registers. This seems similar to the description of the HDC 402 attributes in the Viking landers' GCSC. However, some documentation indicates that the DDP-24, DDP-224, and DDP-124 used sign magnitude arithmetic while the GCSC's HDC 402 is listed as using 2's complement arithmetic.

While unrelated to either the HDC 402 or DDP-124, note that the NASA SSME controller digital computer unit (CDU) was documented as consisting of two computers 'with the same functional characteristics as the Honeywell HDC 601 and DDP-516'. Also, there are NASA documents that indicate that the DDP-516 and DDP-316 assembly language was the same as the Honeywell HDC 601, so there is evidence that Honeywell rebranded 3C DDP systems as HDC systems.

Two HDC 402 processors were used in each GCSC that controlled the Viking landers that landed on Mars.

Viking 1 was the first successful lander on Mars.

On Mars: Exploration of the Red Planet, 1958-1978--The NASA History (Dover Books on Astronomy)

NSSC-1: NASA Standard Spacecraft Computer (1974)

Included for completeness. The NSSC-1 or the NASA Standard Spacecraft Computer-1 was a computer developed at the Goddard Space Flight Center (GSFC) in 1974. The computer was designed with an 18-bit processor, a word size chosen because it gave 4x more accuracy than typical 16-bit processors. The NSSC-1 had development tools hosted on a Xerox XDS 930 mainframe.

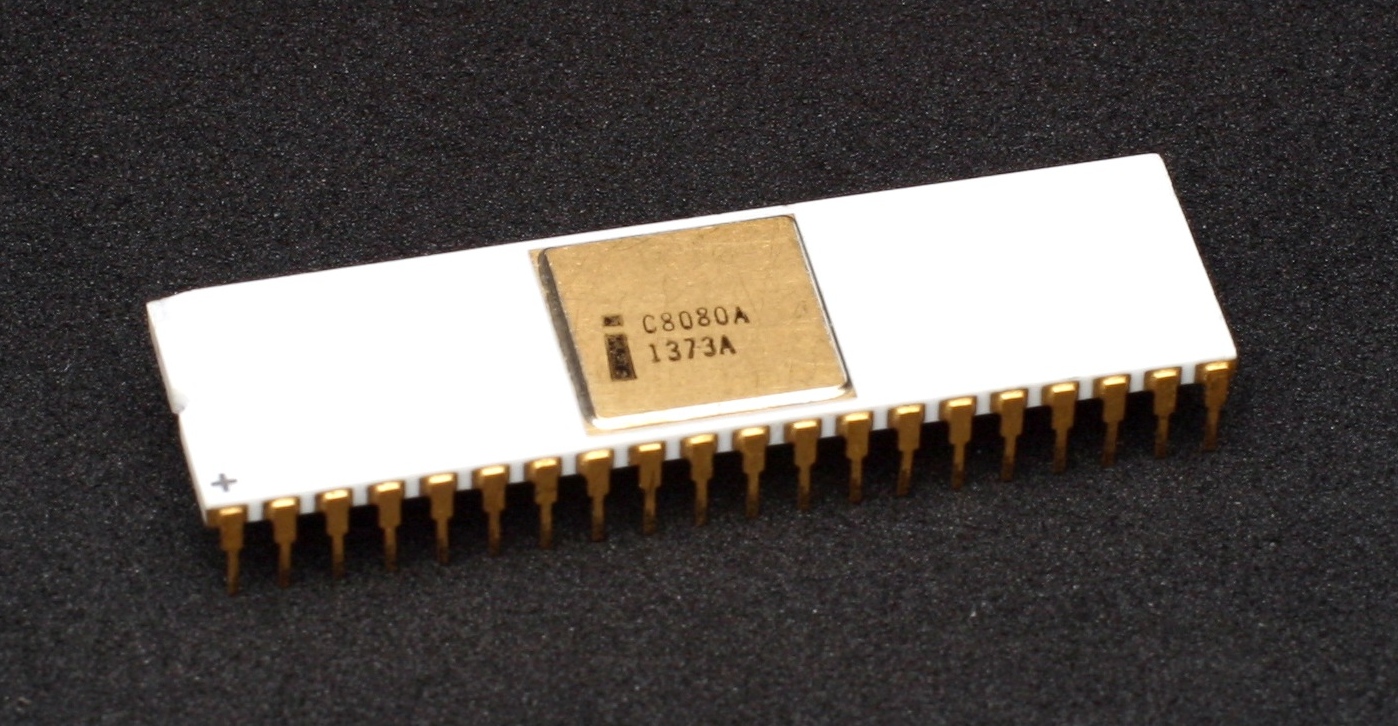

8080 / 8085 (Intel - 1974)

The grand dad of all micro processors, the first micro processor or micro controller. Although dated and very complicated in wiring, there is a wide range of software and tools for them. This includes most CP/M (aka CP/M-80) software. The later 8085, Z80, and other descendants had much simpler wiring, so any work done today would probably be on those successors. See the Z80 below. Many manufacturers supply processor variants built on the 8080 or later Z80 architectures.

The 8080 was used in the Altair 8800 which was designed in 1974 by MITS. The Altair is recognized as the first commercially successful personal computer. The 8080 was also used in the IMSAI 8080 which was the first clone computer. These machines, and others based on the 8080, could run the CP/M operating system which meant that they could share software written for CP/M.

The 8080 has a von Neumann architecture in which instructions and data share the same space addressed by 16-bits. However, like the later 8086/8088 series, I/O has its own address space. The accumulator is 8-bits, but some operations can be done on 16-bits. In addition to the accumulator, the processor contains six 8-bit registers, which can be paired up to be used as 16-bit registers, and it has a 16-bit stack pointer.

The 8080/8085 processors had:

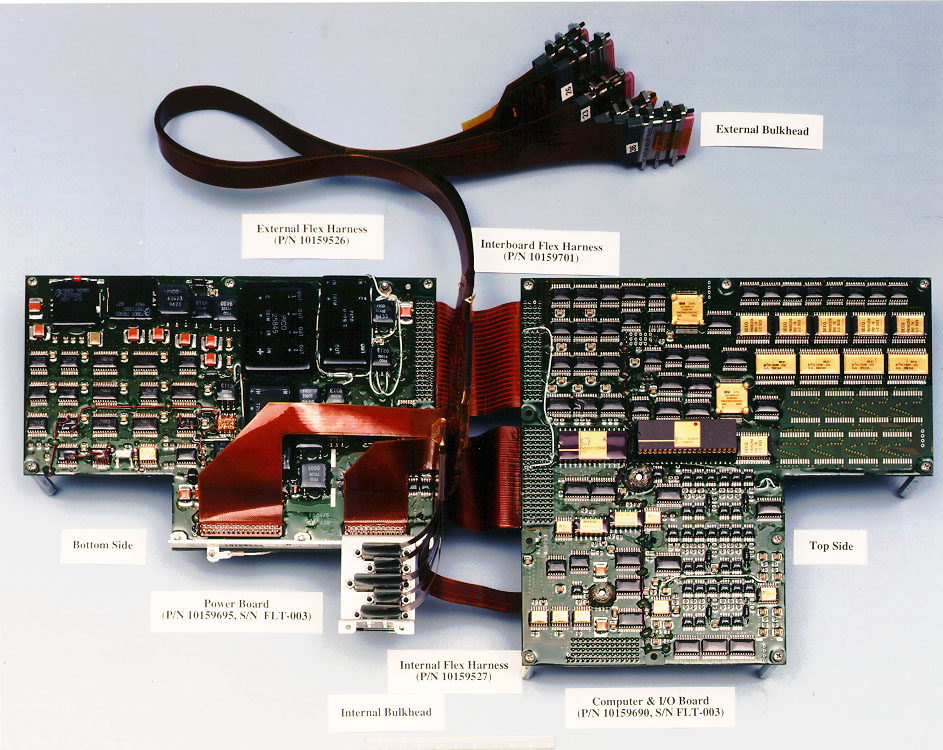

Further the Sojourner rover Control and Navigation Subsystem had:

A radiation hardened 80C85 was used on the Sojourner rover that flew with the Mars Pathfinder spacecraft.

Sojourner was the first successful rover on Mars.

Unlike the executives for the Apollo AGC and the Viking GCSC or VxWorks used in later Power based spacecraft control systems, the core software in the Sojourner rover 8085 code did not use a 'time sharing' or multi-tasking executive. This was possible because the Sojourner rover Control and Navigation Subsystem didn't control time sensitive space navigation or landing which those other systems did. Instead the Sojourner rover 8085 code had a simple control loop that executed commands sequentially.

An 8085 was also used in the Fast Auroral Snapshot Explorer (FAST) satellite, and in each of the 5 Time History of Events and Macroscale Interactions during Substorms (THEMIS) satellites, including the 2 renamed as Acceleration, Reconnection, Turbulence and Electrodynamics of the Moon's Interaction with the Sun (ARTEMIS) spacecraft which were moved into Lunar orbits.

Sojourner: An Insider's View of the Mars Pathfinder Mission by Andrew Mishkin (2003-12-02)

Z80 (Zilog - 1976)

If the 8080 is the grand dad of all micro processors, then the Z80 is grand dad's brother. Zilog was started by engineers who left Intel after working on the 8080 microprocessor. The Z80 is capable of executing the same binary code as the 8080/8085, but the Z80 has a lot of additional instructions and addressing modes. The Z80 isn't as complicated as the 8080 when it comes to wiring one up. It is also a multi functional chip with a lot of built in hardware options. Although very dated it is still very popular due to its very low cost, availability, and wide range of development tools. This includes all CP/M (aka CP/M-80) software. Additionally MSX home computers used this processor and ran the MSX-DOS operating system. Also the Z80 processor was used in many TI graphing calculators, most Tandy TRS-80 home computer models, the Timex/Sinclair home computers, as well as others.

Here is a Z80 CPU Users Manual including instruction set and processor architecture description.

The Z80 has a similar architecture to the 8080/8085 with code and data sharing the same 16-bit address space but with a separate address space for I/O. The accumulator is 8-bits, but some operations can be done on 16-bits. It has a handful of 8-bit explicit registers like the 8080/8085, which can be paired up to be used as 16-bit registers, and a couple of 16-bit registers. The Z80 uses little endian storage. In other words, if a data word is located at address xxx4h, then the low byte of that data word is located at address xxx4h, and the high byte of that word is located at address xxx5h.
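The byte ordering can be checked with a short sketch that stores a 16-bit word into a simulated memory. The `store_word` and `load_word` helpers and the 16-byte memory are hypothetical. Big endian order is included only for contrast.

```python
def store_word(memory, address, value, byteorder):
    """Write a 16-bit word into memory in the given byte order."""
    memory[address:address + 2] = value.to_bytes(2, byteorder)


def load_word(memory, address, byteorder):
    """Read a 16-bit word back from memory in the given byte order."""
    return int.from_bytes(memory[address:address + 2], byteorder)


memory = bytearray(16)

store_word(memory, 0x4, 0x1234, "little")
print(hex(memory[0x4]), hex(memory[0x5]))  # 0x34 0x12  (low byte first)

store_word(memory, 0x8, 0x1234, "big")
print(hex(memory[0x8]), hex(memory[0x9]))  # 0x12 0x34  (high byte first)
```

Reading a word back with the wrong byte order gives 3412h instead of 1234h, which is a common source of bugs when moving data between processor families.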

Z80 Microprocessor

Other controllers in this family include: HD64180, TLCS-Z80, Z180, Z380, eZ80, and Rabbit 2000. The eZ80 added integer multiply instructions.

While Zilog went in one direction with the 8080 architecture trying to build controller chips, Intel went in another. Intel designed the 16-bit 8086 and 8088, which could not execute the same binary code as the 8080/8085, but had similar assembly instructions so that 8080/8085 code could be translated to 8086/8088 assembly code. The 8086 and 8088 led to the 80286 (or just 286), the 80386 (or just 386), the 80486 (or just 486), and then to the Pentium. Descendants of this architecture, often just called x86, are used in most PCs and laptops.

6800 / 6801 / 6802 / 6803 / 6805 / 146805 / 6808 (Motorola - 1974)

The grand mother of them all, the main competitor in the days of the 8080 micro controller. Although very dated, the basic design lived on in later 68 hundred series processors from Motorola. It's quite often possible to assemble code written for a 6800 to run on later 68 hundred processors. It's even possible to execute some code built for a 6800 on later 68 hundred processors since most have super sets of the 6800 instruction set. Variants of this processor were used in GM automotive computers that control fuel injection.

The 6800 has a von Neumann architecture in which instructions and data share the same space and I/O is memory mapped all in the same 16-bit address space. There are two 8-bit accumulators, and some operations can be done on 16-bits. While it has explicit registers, some instructions can use low memory address locations as other registers or pointers to other memory.

Other controllers in this family include: Freescale now NXP HCS08 series.

68HC11 (Motorola - 1985)

The once popular 68HC11 is an 8-bit data, 16-bit address micro controller from Motorola with an instruction set that is similar to the older 68 hundred parts (6800, 6801, 6802, 6803, 6805, 6808). This is a multi functional chip with a lot of hardware built in. The 68HC11 was superseded by the HC16 or M68HC16 and HCS12 or MC9S12.

The 68HC11 has a von Neumann architecture in which instructions, data, and I/O all share the same memory space addressed with 16-bits. Depending on the variety, the 68HC11 has built-in EEPROM, RAM, digital I/O, timers, A/D converters, PWM generator, pulse accumulator, and synchronous and asynchronous serial communications channels. There are two 8-bit accumulators, and some operations can be done on the 16-bit pair of these two accumulators. While it has explicit registers, some instructions can use low memory address locations as other registers or pointers to other memory. It includes integer multiply and divide instructions. The 68HC11 uses big endian storage. In other words, if a data word is located at address xxx4h, then the high byte of that data word is located at address xxx4h, and the low byte of that word is located at address xxx5h. Radiation hardened versions of the 68HC11 have been used in communications satellites.

6502 / 6507 / 6510 / 65c02 / 65802 (MOS Technology - 1975)

MOS Technology was originally started to provide a second source for electronic calculator chips. However, similar to how Zilog formed from engineers who left Intel, MOS Technology had an influx of engineers who left Motorola after working on the 6800 microprocessor. Cheaper than the 8080 or 6800 when introduced, this processor ended up in a number of early home computers. Chips from this family were used in Apple II, Commodore 64/128/Vic20, and Atari 400/800/1200 home computers and Atari 2600 and Nintendo NES game consoles. Although dated, the basic design lived on in the later 65816 from Western Design Center, which was used in the Nintendo Super NES game console and Apple IIGS. Since the 6502 ended up in so many inexpensive home computers, it was quite often the first processor a lot of assembly programmers learned to write code for.

The 6502 has a von Neumann architecture in which instructions and data share the same space and I/O is memory mapped. There is just one 8-bit accumulator. While it has explicit registers, some instructions can use low memory address locations as other registers or pointers to other memory. The 6510, found in Commodore 64 computers, has a built in I/O port. The Ricoh 2A0#, found in Nintendo NES game consoles, integrates a sound generator and game controller interface. The 6502 uses little endian storage. In other words, if a data word is located at address xxx4h, then the low byte of that data word is located at address xxx4h, and the high byte of that word is located at address xxx5h.

Other controllers in this family include: 6507, 6510, 8502, Ricoh 2A0#, 65c02, W65802, W65c02, W65c134, W65c816, W65c265, MELPS xx740, Renesas 38000/740 series, and Renesas 7200.

While MOS Technology went in one direction making the 6502 architecture cheaper than the 6800, Motorola went in another. Motorola designed a number of variants to the 6800 for different controller chip applications. While it was quite often possible to assemble code written for a 6800 to run on later 68 hundred processor variants, they could not always execute the same binary code. Ultimately engineers at Motorola designed the 68000 (or 68 thousand or 68K) and the 6809 that were both 16-bit processors. These design projects overlapped in time. However, the 68000 could do 32-bit math by doing two 16-bit operations internally. This made it seem like a 32-bit processor. It was not possible to execute the same binary nor assemble code written for a 68 hundred to run on a 68 thousand. The 68000 eventually led to the 68020, which was a true 32-bit processor based on the same architecture. This architecture, often just called 68K, was used in most early Macintosh PCs and computer workstations, used as a coprocessor in a number of Tandy TRS-80 computer models, and it was and still is used as an embedded controller.

CDP 1802 (RCA - 1975)

The successor to the 1801, which was the first processor implemented in CMOS. In 1976, the two chips making up the 1801 were integrated together creating the 1802. Some consider the 1802 to be a RISC like microprocessor because of its large set of registers and limited addressing modes, but the instructions are not pipelined and each typically takes 8 clock cycles.

The 1802 has a von Neumann architecture in which instructions and data share the same space addressed by 16-bits. However, like the later 8086/8088 series, I/O has separate instructions. It had an 8-bit accumulator, D, which was used for arithmetic and to transfer values to and from memory. It had sixteen 16-bit registers, which could be accessed as thirty-two 8 bit registers.

Here is a 1802 Datasheet including processor architecture description.

Some variants of the 1802 were built using Silicon-on-Sapphire which is radiation hard. Since there were radiation hard versions, it was used on spacecraft including the Space Shuttle display controller, NASA Magellan, NASA/ESA Ulysses, and the NASA Galileo spacecraft. The 1802 has often been incorrectly claimed to have been used in the earlier Viking and Voyager spacecraft, but it was not. Variants of this processor were used in Chrysler automotive computers for electronic spark control.

PIC12* / PIC14* / PIC16* / PIC17* (General Instrument Microelectronics, MicroChip - 1976)

The PIC series are 8 bit processors and were very popular with hobbyists. They started off as a peripheral interface chip for General Instrument Microelectronics CP1600 16-bit processor; however they were found to be very useful in other control applications. The letters PIC were later changed to mean programmable intelligent computer. The PIC series have Harvard architectures and are RISC like. PIC processors typically have between 128 and 512 bytes of data RAM on chip addressed directly as 128 byte banks or through indirect addressing. However, unlike other processors, the PIC series can not easily address a full 64K of external data memory.

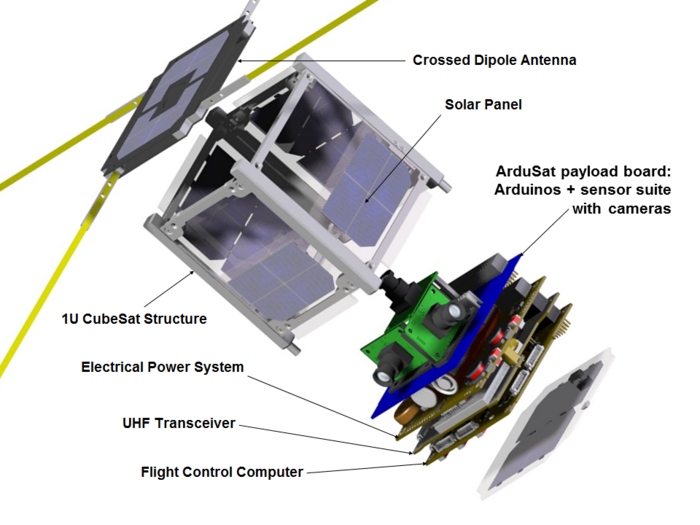

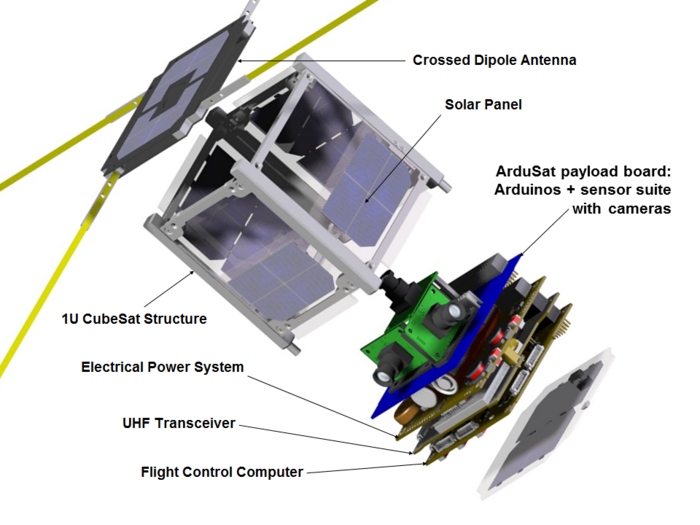

The processors used in earlier VEX Robotics were PIC architecture processors. The processors used in some CubeSats, including CubeSat kits from Pumpkin, are also PIC architecture processors.

MIL-STD-1750A (1980)

The military standard document MIL-STD-1750A (1980), is the definition of a 16-bit computer instruction set. It defined the processor to have sixteen 16-bit registers, and adjacent pairs could be used as 32-bit integer or floating point values, or adjacent triplets could be used for 48-bit extended precision floating point values. Since the architecture was a military standard, many manufacturers supplied variants of this processor.

The MIL-STD-1750A processor architecture was used in many spacecraft. A MIL-STD-1750A was used in the ESA Rosetta, NASA Cassini, NASA Mars Global Surveyor, and the Naval Research Laboratory Clementine Lunar Orbiter.

Here is a MIL-STD-1750A Datasheet including processor architecture description.

8051 / 8052 / 8031 / 8032 (Intel - 1980)

The 8051 was Intel's second generation of micro controllers. Although its design is somewhat different from other processors, it is a very popular processor. A lot of software is available for the 8051 line. Many manufacturers supply what must be a hundred different processor variants of the 8051 architecture for any requirement.

The 8051 has a Harvard architecture with separate address spaces for program memory and data memory.

The program memory can be up to 64K.

The lower portion (4K or 8K depending on type) may reside on chip.

The 8051 can address up to 64K of external data memory, which is accessed only by indirect addressing.

The 8051 has 128 bytes (256 bytes for the 8052) of on-chip data RAM, plus a number of special function registers (SFRs).

I/O is mapped in its own space.

The accumulator is 8-bits.
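Because the address spaces are separate, the same numeric address can refer to different bytes depending on which space is meant. The toy model below makes that concrete. The class name `Memory8051` and its attribute names are hypothetical, and the sizes are taken from the description above; it is a sketch of the idea, not an emulator.

```python
class Memory8051:
    """Toy model of the 8051's separate address spaces (not an emulator)."""

    def __init__(self, internal_ram_size: int = 128):
        # Program memory: up to 64K.
        self.code = bytearray(64 * 1024)
        # External data memory: up to 64K.
        self.xdata = bytearray(64 * 1024)
        # On-chip data RAM: 128 bytes (pass 256 to model an 8052).
        self.iram = bytearray(internal_ram_size)


mem = Memory8051()
mem.code[0x0030] = 0x74   # a byte of program memory
mem.iram[0x0030] = 0x12   # same address, on-chip data RAM
mem.xdata[0x0030] = 0xAB  # same address, external data memory

# Three different bytes live at "address 0x0030".
print(hex(mem.code[0x0030]), hex(mem.iram[0x0030]), hex(mem.xdata[0x0030]))
```

On real hardware the instruction selects the space: `MOVC` reads program memory, `MOVX` reads external data memory, and `MOV` accesses on-chip RAM.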

The 8051 typically would not be used as a flight computer on a deep space spacecraft, but it has been used in satellites and in instruments carried by deep space spacecraft. For example, an 8052 was used in the Lunar Reconnaissance Orbiter LAMP instrument. The processors used in some CubeSats, including CubeSat kits from Pumpkin, are also 8051 architecture processors. Other controllers in this family include the MCS-151, 80C320, AT89 series (such as the AT89S52), CY8C3xxxx, HT85FXX, and XC800 family.

ARM (Arm Holdings - 1985)

ARM stands for Advanced RISC Machine, but originally stood for Acorn RISC Machine. While not a 16-bit or 8-bit micro controller, ARM architecture processors are included in this list because they are used in most smartphones. They are also used in the BeagleBoard and Raspberry Pi project boards, in later VEX Robotics controllers, in the Lego Mindstorms NXT, in some CubeSats, and in some, but not most, Arduino boards. ARM doesn't fabricate chips; it licenses the processor architecture to electronics manufacturers. Since the design is available for license, many manufacturers fabricate their own variants of this processor with custom capabilities.

to run 80c85/Z80 code on ARM Android smartphones

to run Arduino/AVR code on ARM Android smartphones

RTX2000 (Harris - 1988)

The RTX2000 and RTX2010 were descendants of the Novix NC4000 and NC4016 processors. All of these processors were designed around the stack machine model of the Forth programming language. The RTX2000 architecture was used for a number of spacecraft. For example, an RTX2000 architecture processor was used on the Near Earth Asteroid Rendezvous - Shoemaker (NEAR - Shoemaker) spacecraft sent to the near Earth asteroid Eros.
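For readers unfamiliar with stack machines, the sketch below evaluates a tiny Forth-like postfix program on a data stack. It is only a model of the programming style: the function name `run_stack_program` and the handful of supported words are assumptions for illustration, and it says nothing about the actual RTX2000 instruction set.

```python
def run_stack_program(source: str) -> list[int]:
    """Evaluate a whitespace-separated postfix program; return the final stack."""
    stack: list[int] = []
    binary_ops = {
        "+": lambda a, b: a + b,
        "-": lambda a, b: a - b,
        "*": lambda a, b: a * b,
    }
    for token in source.split():
        if token in binary_ops:
            # Operands come off the stack; the result goes back on.
            b = stack.pop()
            a = stack.pop()
            stack.append(binary_ops[token](a, b))
        elif token == "dup":
            stack.append(stack[-1])  # duplicate the top item
        elif token == "swap":
            stack[-1], stack[-2] = stack[-2], stack[-1]
        else:
            stack.append(int(token))  # anything else is a number literal
    return stack


if __name__ == "__main__":
    print(run_stack_program("2 3 + 4 *"))  # [20]
    print(run_stack_program("5 dup *"))    # [25]
```

Operations take their operands implicitly from the stack, so instructions need no register or address fields, which is part of what makes this model attractive for small hardware.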

Here is an RTX2010 Datasheet including processor architecture description.

Power (IBM - 1990)

While not a 16-bit or 8-bit micro controller, Power architecture processors are included in this list as an example of one of the most widely used processors in deep space spacecraft today. Other 32-bit and 64-bit CPUs that might be used include the SPARC architecture, typically in ESA and ISRO missions, and the SuperH architecture, typically in JAXA missions. The 32-bit and 64-bit processors tend to be RISC processors, although CISC 386/x64 architecture processors are sometimes used in spacecraft.

The Power processor architecture was used in many NASA rovers and spacecraft.

A radiation hardened RAD6000 was used on the Mars Pathfinder lander, the MER rovers (Spirit and Opportunity), the Phoenix Mars Lander, and the Dawn spacecraft.

A radiation hardened RAD750 was used on the Mars Reconnaissance Orbiter (MRO), Lunar Reconnaissance Orbiter (LRO), LCROSS, Mars Science Laboratory (Curiosity), Perseverance, GRAIL, LADEE, MAVEN, and OSIRIS-REx missions.

The RAD750 is basically a radiation hardened version of the PowerPC 750 originally manufactured by Motorola.

The RAD750 is manufactured by BAE Systems and costs about $200,000 per unit.

Most of these NASA spacecraft used the VxWorks real-time operating system (RTOS) and software developed in C or C++.

The Curiosity rover is reported to have about 2.5 million lines of C code that executed in over 130 threads.

The processors used in the GameCube, Wii, Wii U, PlayStation 3, and Xbox 360 game consoles and the Power Mac PC are also Power architecture processors.

Roving Mars: Spirit, Opportunity, and the Exploration of the Red Planet

Mars Rover Curiosity: An Inside Account from Curiosity's Chief Engineer

MSP430 (Texas Instruments - 1992)

The MSP430 is a microcontroller family built around a 16-bit CPU. It is designed for low cost and low power consumption. The processors used in some CubeSats, including CubeSat kits from Pumpkin, are also MSP430 architecture processors.

The MSP430 has a von Neumann architecture in which instructions and data share the same address space and I/O is memory mapped. The MSP430 could be considered a simplified variation of the DEC PDP-11 processor architecture. The processor contains sixteen 16-bit registers, of which four are dedicated to special purposes. R0 is used for the program counter (PC), R1 for the stack pointer (SP), R2 for the status register, and R3 as a constant generator. By comparison, the PDP-11 had only eight registers, with PC and SP in the two highest registers instead of the two lowest. The MSP430 addressing modes are a subset of the PDP-11's. The MSP430 uses little endian storage. In other words, if a data word is located at address xxx4h, then the low byte of that data word is located at address xxx4h, and the high byte of that word is located at address xxx5h.
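The byte order described above can be checked with a short sketch. The helper names `store_word_little_endian` and `load_word_little_endian` and the 16-byte `memory` array are hypothetical; they model a byte-addressed memory, not the MSP430 itself.

```python
def store_word_little_endian(memory: bytearray, address: int, value: int) -> None:
    """Store a 16-bit word with the low byte at the lower address."""
    memory[address:address + 2] = value.to_bytes(2, "little")


def load_word_little_endian(memory: bytearray, address: int) -> int:
    """Read a 16-bit word whose low byte sits at the lower address."""
    return int.from_bytes(memory[address:address + 2], "little")


memory = bytearray(16)
store_word_little_endian(memory, 0x4, 0x1234)

print(hex(memory[0x4]))  # 0x34 (low byte at address ...4h)
print(hex(memory[0x5]))  # 0x12 (high byte at address ...5h)
print(hex(load_word_little_endian(memory, 0x4)))  # 0x1234
```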

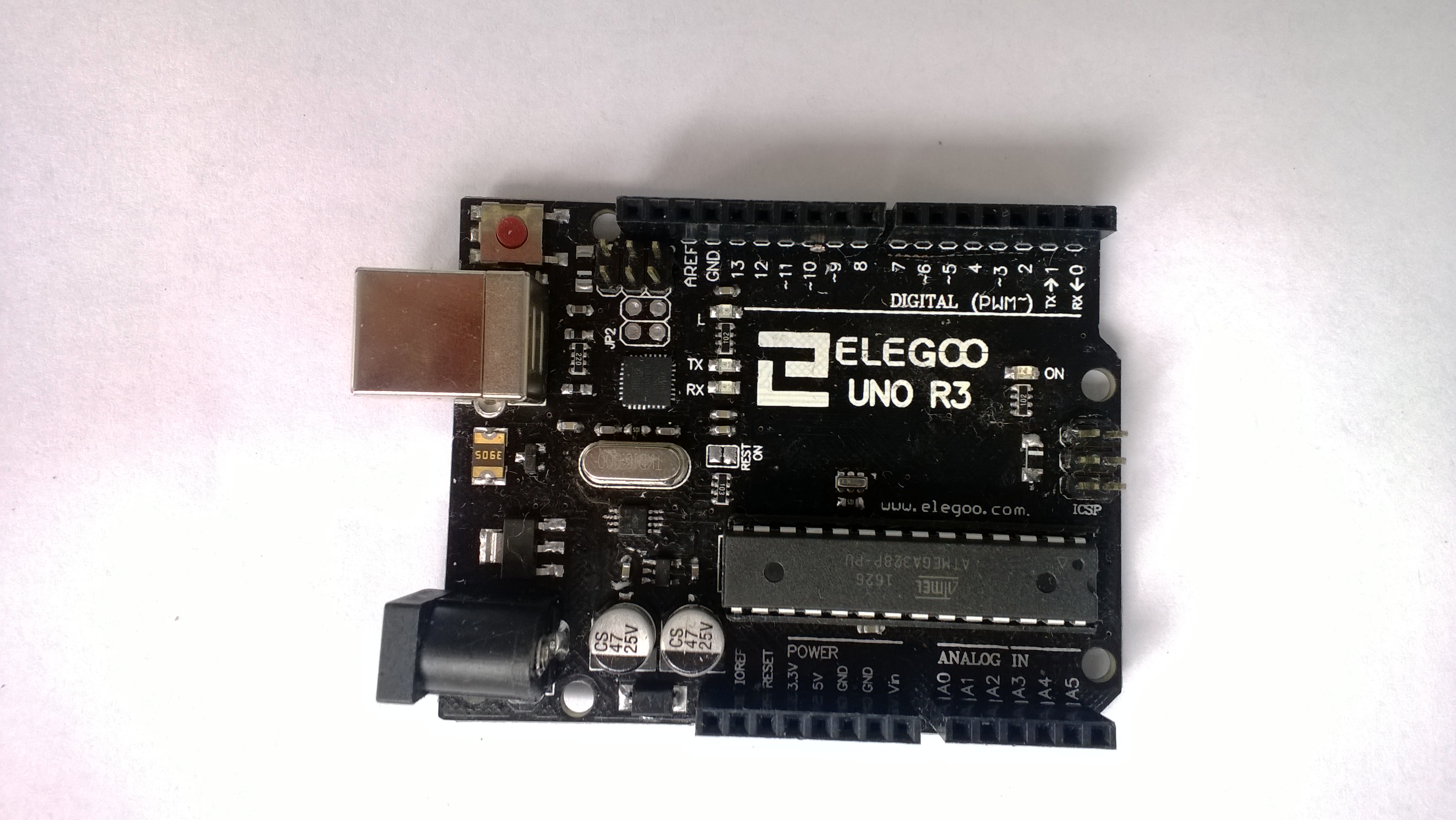

AVR (Atmel - 1996)

The AVR series became very popular because it is the processor used in most Arduino boards. It is a multifunction chip with a lot of built-in hardware options, and it is also used in many RepRap 3D printers. The processors used in some CubeSats, including CubeSat kits from Interorbital Systems, are also AVR architecture processors. Atmel has released a radiation hardened AVR for space applications: the ATmegaS128 is the radiation tolerant version of the ATmega128.

Here is an AVR Assembler Instructions listing including details of the processor architecture.

The AVR has a modified Harvard architecture with separate address spaces for program memory and data memory. I/O has its own instructions, but the I/O registers are also mapped to a subset of the data memory space. Likewise, the general purpose registers are addressed as if they were low data memory locations. Depending on the variety, the AVR has built-in digital I/O, timers, A/D converters, PWM generators, and serial communications channels. Many AVR devices include a hardware integer multiply instruction, but the instruction set has no divide instruction, so division is performed in software. The AVR uses little endian storage. In other words, if a data word is located at address xxx4h, then the low byte of that data word is located at address xxx4h, and the high byte of that word is located at address xxx5h.
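The mapping of registers and I/O into the data space can be sketched as simple address arithmetic. The example assumes the classic AVR layout, in which the 32 general purpose registers occupy data addresses 0x00 to 0x1F and the 64 I/O registers reachable by the `IN` and `OUT` instructions occupy 0x20 to 0x5F; other AVR families are laid out differently. The function names are hypothetical.

```python
REGISTER_FILE_BASE = 0x00  # R0..R31 at data addresses 0x00..0x1F (assumed layout)
IO_SPACE_OFFSET = 0x20     # I/O address 0x00..0x3F maps to data 0x20..0x5F


def register_data_address(register_number: int) -> int:
    """Data-space address of general purpose register Rn."""
    if not 0 <= register_number <= 31:
        raise ValueError("register number must be 0..31")
    return REGISTER_FILE_BASE + register_number


def io_data_address(io_address: int) -> int:
    """Data-space address of an I/O register given its IN/OUT address."""
    if not 0 <= io_address <= 0x3F:
        raise ValueError("I/O address must be 0x00..0x3F")
    return io_address + IO_SPACE_OFFSET


if __name__ == "__main__":
    # The status register SREG is at I/O address 0x3F on classic AVRs.
    print(hex(io_data_address(0x3F)))      # 0x5f
    print(hex(register_data_address(31)))  # 0x1f
```

This is why datasheets for these parts often list two addresses for each I/O register: one for `IN`/`OUT` and one, offset by 0x20, for load and store instructions.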

ATtiny13A-PU picoPower AVR Microcontroller

for Android smartphones

Assemblers for 8 and 16-bit CPUs

Compilers for 8 and 16-bit CPUs

Debuggers for 8 and 16-bit CPUs

This page was previously hosted on GeoCities as www.geocities.com/rjkuhn_2000/software.htm. That old page has been copied and replicated in a number of other locations on the World Wide Web, but those other locations are snapshots and are not updated.

Text Copyright (C) 2000 - 2002, 2017 - 2025 R. J. Kuhn. Please note that you are not allowed to reproduce or rehost this page without written permission.